In 1998, I unintentionally created a racially biased artificial intelligence algorithm. There are lessons in that story that resonate even more strongly today.

The dangers of bias and errors in AI algorithms are now well known. Why, then, has there been a flurry of blunders by tech companies in recent months, especially in the world of AI chatbots and image generators? Initial versions of ChatGPT produced racist output. The DALL-E 2 and Stable Diffusion image generators both showed racial bias in the pictures they created.

My own epiphany as a white male computer scientist occurred while teaching a computer science class in 2021. The class had just viewed a video poem by Joy Buolamwini, AI researcher and artist and the self-described poet of code. Her 2019 video poem “AI, Ain’t I a Woman?” is a devastating three-minute exposé of racial and gender biases in automatic face recognition systems developed by tech companies such as Google and Microsoft.

The systems often fail on women of color, incorrectly labeling them as male. Some of the failures are particularly egregious: the hair of Black civil rights leader Ida B. Wells is labeled as a “coonskin cap”; another Black woman is labeled as possessing a “walrus mustache.”

Echoing through the years

I had a horrible déjà vu moment in that computer science class: I suddenly remembered that I, too, had once created a racially biased algorithm. In 1998, I was a doctoral student. My project involved tracking the movements of a person’s head based on input from a video camera. My doctoral adviser had already developed mathematical techniques for accurately following the head in certain situations, but the system needed to be much faster and more robust. Earlier in the 1990s, researchers in other labs had shown that skin-colored areas of an image could be extracted in real time. So we decided to focus on skin color as an additional cue for the tracker.

I used a digital camera, still a rarity at the time, to take a few photos of my own hand and face, and I also photographed the hands and faces of two or three other people who happened to be in the building. It was easy to manually extract some of the skin-colored pixels from these images and construct a statistical model for the skin colors. After some tweaking and debugging, we had a surprisingly robust real-time head-tracking system.
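The article does not say exactly what kind of statistical model was used. One common choice, assumed here purely for illustration, is a single Gaussian over (R, G, B) pixel values: you average the hand-picked pixels and measure how their channels vary together. The function name `fit_skin_model` and the sample pixels are hypothetical. The sketch makes one point concrete: the model knows only the colors it was shown.

```python
# A minimal sketch, assuming the skin model is a single Gaussian over
# (R, G, B) pixel values. fit_skin_model and the sample pixels are
# hypothetical illustrations, not the 1998 tracker's actual code or data.

def fit_skin_model(pixels):
    """Estimate the mean and sample covariance of a list of (R, G, B) tuples."""
    n = len(pixels)
    if n < 2:
        raise ValueError("need at least two pixels to estimate a covariance")
    # Average value of each color channel.
    mean = [sum(p[c] for p in pixels) / n for c in range(3)]
    # 3x3 sample covariance: how the channels vary together across the samples.
    cov = [
        [sum((p[i] - mean[i]) * (p[j] - mean[j]) for p in pixels) / (n - 1)
         for j in range(3)]
        for i in range(3)
    ]
    return mean, cov


# Hypothetical skin pixels picked by hand from photos of a few similar-looking people.
samples = [(220, 170, 150), (200, 150, 120), (230, 175, 165), (210, 165, 140)]
mean, cov = fit_skin_model(samples)
print(mean)  # [215.0, 165.0, 143.75]
```

If every sample comes from people with similar skin, the fitted mean and spread describe only that narrow range of colors, however well the code itself works.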

Not long afterward, my adviser asked me to demonstrate the system to some visiting company executives. When they walked into the room, I was instantly flooded with anxiety: the executives were Japanese. In my casual experiment to see whether a simple statistical model would work with our prototype, I had collected data from myself and a handful of others who happened to be in the building. But 100% of these subjects had “white” skin; the Japanese executives did not.

Miraculously, the system worked reasonably well on the executives anyway. But I was shocked by the realization that I had created a racially biased system that could easily have failed for other nonwhite people.

Privilege and priorities

How and why do well-educated, well-intentioned scientists produce biased AI systems? Sociological theories of privilege provide one useful lens.

Ten years before I created the head-tracking system, the scholar Peggy McIntosh proposed the idea of an “invisible knapsack” carried around by white people. Inside the knapsack is a treasure trove of privileges such as “I can do well in a challenging situation without being called a credit to my race,” and “I can criticize our government and talk about how much I fear its policies and behavior without being seen as a cultural outsider.”

In the age of AI, that knapsack needs some new items, such as “AI systems won’t give poor results because of my race.” The invisible knapsack of a white scientist would also need: “I can develop an AI system based on my own appearance, and know it will work well for most of my users.”

AI researcher and artist Joy Buolamwini’s video poem ‘AI, Ain’t I a Woman?’

One suggested remedy for white privilege is to be actively anti-racist. For the 1998 head-tracking system, it might seem obvious that the anti-racist remedy is to treat all skin colors equally. Certainly, we can and should ensure that the system’s training data represents the range of all skin colors as equally as possible.

Unfortunately, this does not guarantee that all skin colors observed by the system will be treated equally. The system must classify every possible color as skin or nonskin. Therefore, some colors lie right on the boundary between skin and nonskin, a region computer scientists call the decision boundary. A person whose skin color falls on the wrong side of this decision boundary will be classified incorrectly.
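To make the decision boundary concrete, here is a minimal sketch that assumes a Gaussian skin model with a diagonal covariance. Under that assumption, a color counts as skin if its squared Mahalanobis distance from the mean is below a cutoff. `MEAN`, `VAR` and `THRESHOLD` are hypothetical values, not parameters from any real tracker.

```python
# A minimal sketch of a skin/nonskin decision boundary, assuming a Gaussian
# skin model with a diagonal covariance (channels treated as independent).
# MEAN, VAR and THRESHOLD are hypothetical values chosen for illustration.

MEAN = (215.0, 165.0, 145.0)  # average skin color in the (hypothetical) training pixels
VAR = (150.0, 120.0, 200.0)   # per-channel variance of those pixels
THRESHOLD = 9.0               # squared-distance cutoff, i.e. a Mahalanobis distance of 3


def mahalanobis_sq(rgb):
    """Squared Mahalanobis distance from MEAN, using the diagonal covariance VAR."""
    return sum((x - m) ** 2 / v for x, m, v in zip(rgb, MEAN, VAR))


def is_skin(rgb):
    """Label a color as skin if it lies inside the boundary mahalanobis_sq == THRESHOLD."""
    return mahalanobis_sq(rgb) <= THRESHOLD


for color in [(215, 165, 145), (179, 165, 145), (178, 165, 145), (120, 80, 60)]:
    print(color, is_skin(color))
```

This prints `True`, `True`, `False`, `False`. Lowering the red channel by a single unit, from 179 to 178, moves a color across the boundary. A darker color such as (120, 80, 60) falls far outside a boundary drawn around lighter training samples.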

Scientists also face a nasty subconscious dilemma when incorporating diversity into machine learning models: diverse, inclusive models perform worse than narrow models.

A simple analogy can explain this. Imagine you are given a choice between two tasks. Task A is to identify one particular type of tree, for example, elm trees. Task B is to identify five types of trees: elm, ash, locust, beech and walnut. It’s obvious that if you are given a fixed amount of time to practice, you will perform better on Task A than on Task B.

In the same way, an algorithm that tracks only white skin will be more accurate than an algorithm that tracks the full range of human skin colors. Even when they are aware of the need for diversity and fairness, scientists can be subconsciously affected by this competing need for accuracy.
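The toy sketch below shows one way this trade-off shows up for a color-based tracker. Every number in it is hypothetical, and it assumes simple diagonal-Gaussian color models. The narrow model, fitted to a few similar light skin tones, misses a darker skin tone but correctly rejects a brown background color. The wide model finds the darker skin tone, but its larger acceptance region also takes in the background.

```python
# A toy illustration of the accuracy-versus-coverage trade-off, assuming
# diagonal-Gaussian skin color models. Every number here is hypothetical.

def accepts(rgb, mean, var, threshold=9.0):
    """True if rgb is within squared Mahalanobis distance `threshold` of mean."""
    return sum((x - m) ** 2 / v for x, m, v in zip(rgb, mean, var)) <= threshold


# A narrow model fitted to a few similar light skin tones...
NARROW = ((215.0, 165.0, 145.0), (150.0, 120.0, 200.0))
# ...and a wide model stretched to cover a much broader range of skin tones.
WIDE = ((160.0, 115.0, 95.0), (2500.0, 1800.0, 1800.0))

DARK_SKIN = (100.0, 70.0, 55.0)     # a darker skin tone
WOODEN_DOOR = (150.0, 110.0, 80.0)  # a brown background color that is not skin

for name, (mean, var) in [("narrow", NARROW), ("wide", WIDE)]:
    print(name, accepts(DARK_SKIN, mean, var), accepts(WOODEN_DOOR, mean, var))
```

This prints `narrow False False` and then `wide True True`. Real trackers combine color with other cues, so this covers only the color component of the problem.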

Hidden in the numbers

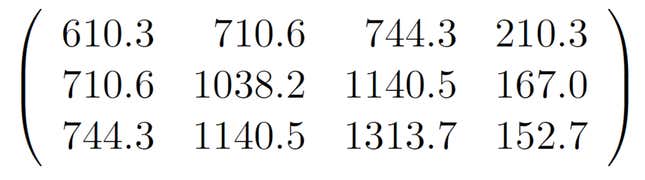

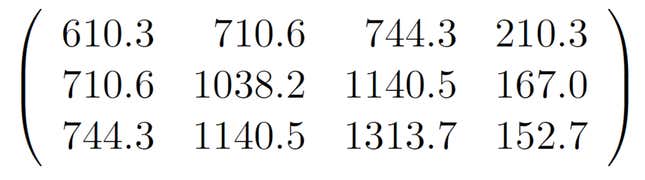

My creation of a biased algorithm was thoughtless and potentially offensive. Even more concerning, this incident demonstrates how bias can remain concealed deep inside an AI system. To see why, consider a particular set of 12 numbers in a matrix of three rows and four columns. Do they seem racist? The head-tracking algorithm I developed in 1998 is controlled by a matrix like this, which describes the skin color model. But it’s impossible to tell from these numbers alone that this is in fact a racist matrix. They are just numbers, determined automatically by a computer program.
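The article does not say how those 12 numbers are organized. One plausible layout, assumed here only for illustration, places a three-element mean color in the first column and a 3×3 covariance matrix in the remaining three columns. The function names and values below are hypothetical. In this layout, nothing in the matrix records whose skin was sampled.

```python
# A minimal sketch, assuming (hypothetically) that the 12 numbers are a
# 3-element mean vector next to a 3x3 covariance matrix for (R, G, B).
# The values below are made up for illustration.

def pack_model(mean, cov):
    """Lay out a 3-D Gaussian color model as 3 rows x 4 columns:
    column 0 holds the mean, columns 1-3 hold the covariance."""
    return [[mean[i]] + list(cov[i]) for i in range(3)]


def unpack_model(matrix):
    """Recover (mean, covariance) from the 3x4 layout used by pack_model."""
    return [row[0] for row in matrix], [row[1:] for row in matrix]


mean = [215.0, 165.0, 143.75]
cov = [[160.0, 140.0, 230.0],
       [140.0, 130.0, 210.0],
       [230.0, 210.0, 370.0]]
matrix = pack_model(mean, cov)
for row in matrix:
    print(row)
```

A model fitted to a much more diverse set of people would produce a matrix of exactly the same shape. Only the values would differ, and nothing in them would be labeled.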

The problem of bias hiding in plain sight is much more severe in modern machine-learning systems. Deep neural networks, currently the most popular and powerful type of AI model, often have millions of numbers in which bias could be encoded. The biased face recognition systems critiqued in “AI, Ain’t I a Woman?” are all deep neural networks.

The good news is that a great deal of progress on AI fairness has already been made, both in academia and in industry. Microsoft, for example, has a research group known as FATE, devoted to Fairness, Accountability, Transparency and Ethics in AI. A leading machine-learning conference, NeurIPS, has detailed ethics guidelines, including an eight-point list of negative social impacts that must be considered by researchers who submit papers.

Who’s in the room is who’s at the table

On the other hand, even in 2023, fairness can still be the victim of competitive pressures in academia and industry. The flawed Bard and Bing chatbots from Google and Microsoft are recent evidence of this grim reality. The commercial necessity of building market share led to the premature release of these systems.

These systems suffer from exactly the same problems as my 1998 head tracker. Their training data is biased. They are designed by an unrepresentative group. They face the mathematical impossibility of treating all categories equally. They must somehow trade accuracy for fairness. And their biases are hiding behind millions of inscrutable numerical parameters.

So how far has the AI field really come since it was possible, over 25 years ago, for a doctoral student to design and publish the results of a racially biased algorithm with no apparent oversight or consequences? It’s clear that biased AI systems can still be created unintentionally and easily. It’s also clear that the bias in these systems can be harmful, hard to detect and even harder to eliminate.

These days it’s a cliché to say that industry and academia need diverse groups of people “in the room” designing these algorithms. It would be helpful if the field could reach that point. But in reality, with North American computer science doctoral programs graduating only about 23% female students and 3% Black and Latino students, there will continue to be many rooms and many algorithms in which underrepresented groups are not represented at all.

That’s why the fundamental lessons of my 1998 head tracker are even more important today: it’s easy to make a mistake, it’s easy for bias to enter undetected, and everyone in the room is responsible for preventing it.


John MacCormick, Professor of Computer Science, Dickinson College

This article is republished from The Conversation under a Creative Commons license. Read the original article.
